Biased hiring is not just expensive; it is disastrous. It filters out qualified candidates and traps teams in homogeneity, inviting unnecessary trouble. According to the National Center for Biotechnology Information (NCBI), biased processes raise new-hire failure rates by up to 16.1%, which costs around $710 per bad hire.

On the other hand, diverse teams tend to outperform homogeneous ones, and companies with greater diversity are more likely to be profitable, so reducing bias is also a sound business decision. In this guide, we’ll explore how bias enters the hiring process, why it harms hire quality, and which practical strategies counter it, so you can improve both hiring ethics and candidate performance.

TL;DR

- From job ads to interviews and final decisions, bias can appear at every step.

- Common bias types include confirmation bias, name/gender/age bias, culture-fit bias, contrast effects, and automation bias.

- Our eight strategies: define roles clearly, use structured screening criteria, screen resumes blind, run structured interviews, score the evidence, involve diverse panels, delay gut feelings until the end, and separate true potential from familiarity.

- With data analytics, behavioral assessments, and data-driven tools, it is possible to objectively highlight skills, values, and core motives.

- To measure progress, track the diversity of applicant pools and hires, screening pass rates by group, and new-hire performance.

What is Hiring Bias?

Hiring bias occurs when irrelevant personal traits, such as gender, race, age, educational pedigree, or cultural similarity, affect how decision-makers judge candidates. As one HR analysis puts it, it is when recruiters allow irrelevant personal traits to influence their evaluation of candidates.

Even well-intentioned hiring managers can fall prey to such biases. One study finds that nearly 70% of hiring decisions are made within the first few minutes of an interview, and most managers admit their choices are influenced by factors unrelated to job performance.

There is also a clear business case for bias reduction, because diverse teams drive better results. Research from McKinsey and others shows companies with above-average diversity on executive teams are more likely to outperform on profitability.

Conscious vs unconscious bias in hiring

Requiring hires from only certain schools or explicitly discounting mothers of young children are conscious biases that create specific barriers. Even without ill intent, many biases lurk in the subconscious. Hiring teams often carry unconscious preferences for candidates who feel familiar or likable. These unconscious biases can influence everything from resume screening to interview impressions without being noticed.

Where Bias Enters the Hiring Process

Bias can get in the way of hiring. Here’s how:

1. Job descriptions

Vague or esoteric language can skew the applicant pool. Gendered terms and excessive must-have criteria can subtly repel diverse talent. For instance, dozens of bullet-point requirements often discourage candidates who don’t meet every wish-list item.

2. Resume screening

Initial screens rely heavily on first impressions. While reading resumes, reviewers might unknowingly favor names, addresses, and schools they are familiar with. Callback rates can also differ between otherwise identical resumes when only such cues change.

3. Interview and evaluation

Unstructured interviews give bias the most room to settle in. Personal affinity and first impressions can dominate. For example, off-script questions can introduce gender and cultural bias. Interviewers may also unconsciously favor candidates who share a similar background or communication style.

4. Decision-making and debrief

Even after the interviews, bias can influence group discussions. Conformity bias can lead teams to follow the opinions of high-status members. Anchoring bias can let early applicants set an unfair standard: one candidate’s resume may anchor the discussion so firmly that all others are judged relative to it. If we aren’t careful, these distortions end up shaping the final decision.

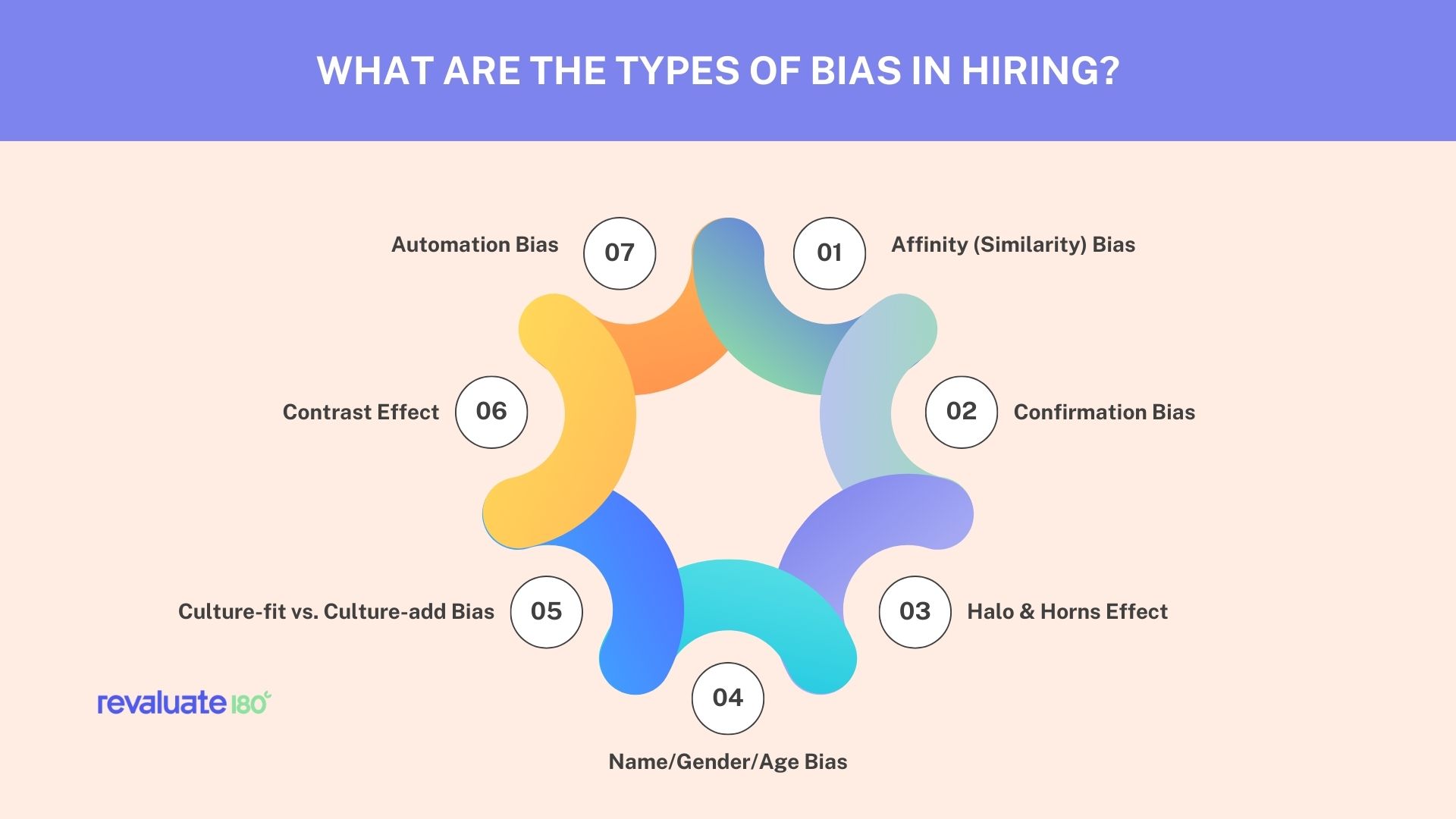

What are the Types of Bias in Hiring?

To reduce bias in the workplace, you must understand the types of biases affecting your teams:

1. Affinity (similarity) bias

This is a ‘like-me’ bias: interviewers unconsciously favor candidates who share traits such as alma mater, hometown, interests, or personality. A hiring manager might give extra credit to candidates who attended the same college or share similar hobbies. Harvard Business Review has reported affinity bias as one of the most common hiring biases. It draws us toward people we perceive as similar to ourselves, which is precisely how it skews hiring toward homogeneity.

2. Confirmation bias

This bias means your first impression drives everything that follows in the interview. If an interviewer forms an early positive opinion, they seek information that confirms it and overlook the red flags. Conversely, a poor first impression can taint the rest of the interview. Research on hiring notes that once an opinion is set, subsequent answers are filtered through the lens of confirming that first impression. This can sink a promising candidacy.

3. Halo and horns effect

The halo effect occurs when a strong positive trait in a candidate leads to rating unrelated traits more favourably. For instance, an interviewer may assume that because someone is articulate, she must be a great leader in every way. On the other hand, the horns effect occurs when a single flaw casts a shadow over everything else. For instance, a single weak handshake can lead an interviewer to dismiss a highly qualified engineer.

4. Name/gender/age bias

These biases typically involve demographics implied by a resume. During resume screening, cues like a person’s name, gender, or age can lead to unfair judgments. For instance, women have historically received fewer callbacks than identically qualified men. Ageism has also become common, and tech companies have faced many lawsuits for screening out candidates over 40. Even though everyone denies falling prey to it, both automated and human screens often keep replicating it.

5. Culture-fit vs culture-add bias

Culture fit may sound positive, but it often means fitting in with the existing team. If considered too seriously, it becomes a bias toward hiring copies of current staff. For instance, favouring candidates with very similar backgrounds and personalities can risk keeping the team in the comfort zone, where ideas are stifled.

Experts on LinkedIn warn that hiring only for fit can lead to a team that all look and think alike, which reinforces narrow perspectives. A better approach is culture add, where you hire people who align with core values and also bring fresh perspectives to the table.

6. Contrast effect

This subtle bias arises from comparing candidates to one another rather than to the skills the job requires. If three mediocre candidates interview in a row and a strong candidate follows, that candidate appears even more impressive; the reverse also holds, and a solid candidate can look weak after a run of strong ones. The result is inconsistent standards. Focusing on relative impressions rather than absolute criteria can lead to irrational choices, so guard against this effect.

7. Automation bias

This occurs when you overtrust your tools. Many companies use ATS and AI to screen resumes and rank candidates, and that reliance on software can introduce bias. These tools are only as fair as the data and rules they’re built upon. For instance, an ATS might automatically drop resumes lacking specific keywords, eliminating strong candidates who phrase their skills differently.

This becomes worse when a model is trained on historical hiring data, because AI can then perpetuate past discrimination by systematically excluding candidates of a specific gender, ethnicity, or background. You can avoid this by validating the outputs and retaining human judgment.
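As a rough illustration of the keyword problem, the sketch below compares an exact-keyword filter with one that first maps alternative phrasings to the required skill. The required keywords, the synonym table, and the function names are all hypothetical, and real ATS products work differently; the point is only that a rigid match can reject a qualified resume.

```python
import re

# Hypothetical requirements and synonym table, for illustration only.
REQUIRED_KEYWORDS = {"python", "sql"}
SYNONYMS = {"postgresql": "sql", "mysql": "sql", "pandas": "python"}


def tokens(resume_text: str) -> set[str]:
    """Lowercase the text and split it into simple word tokens."""
    return set(re.findall(r"[a-z0-9]+", resume_text.lower()))


def exact_keyword_pass(resume_text: str) -> bool:
    """Pass only if every required keyword appears verbatim."""
    return REQUIRED_KEYWORDS <= tokens(resume_text)


def synonym_aware_pass(resume_text: str) -> bool:
    """Map known alternative phrasings to the required keyword before matching."""
    normalized = {SYNONYMS.get(token, token) for token in tokens(resume_text)}
    return REQUIRED_KEYWORDS <= normalized


resume = "Built reporting pipelines in Python on PostgreSQL"
print(exact_keyword_pass(resume))   # False: "sql" never appears verbatim
print(synonym_aware_pass(resume))   # True: "postgresql" is mapped to "sql"
```

Even a synonym table only covers the phrasings someone thought of in advance, which is one more reason to spot-check rejected resumes by hand.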

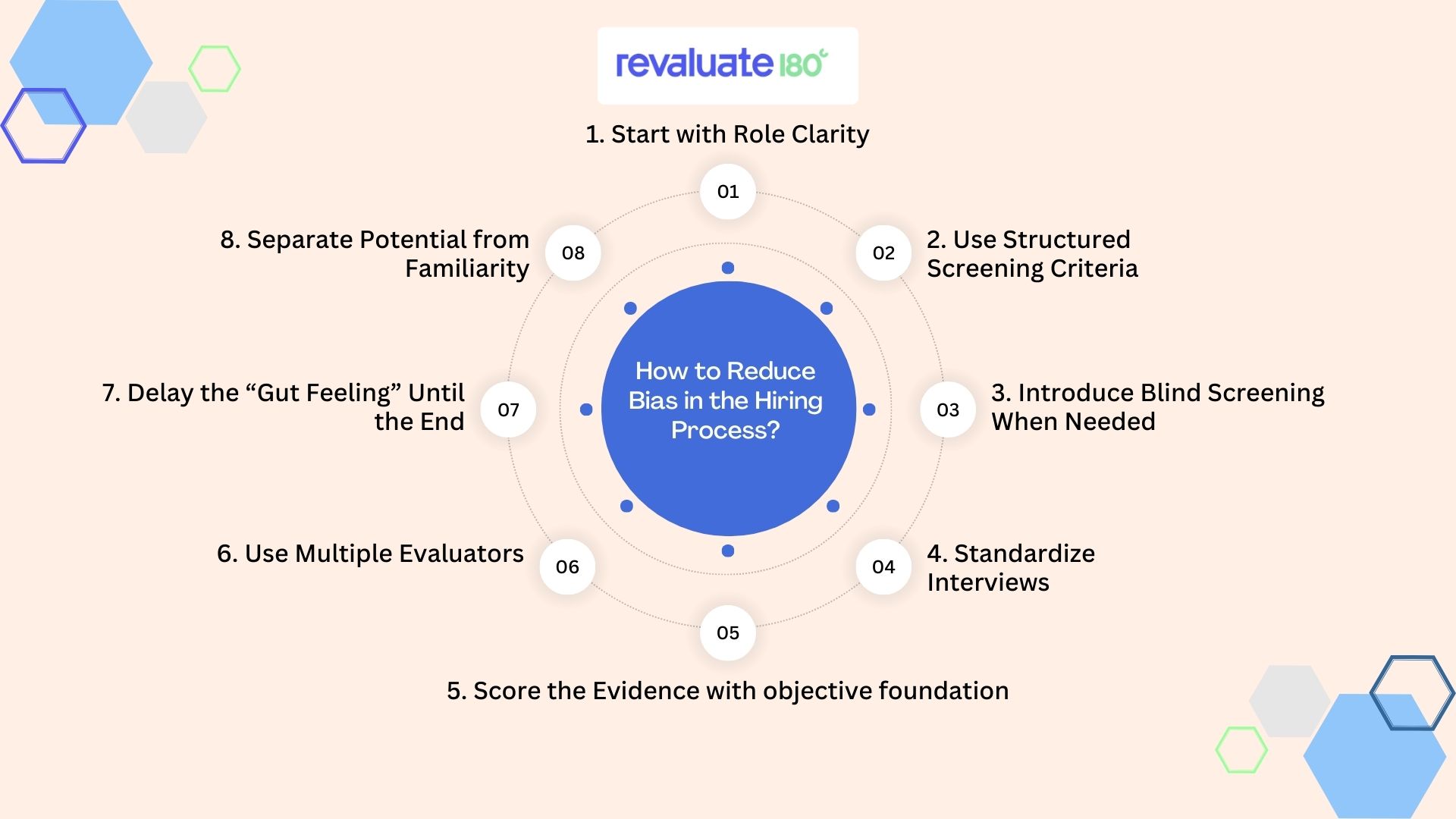

How to Reduce Bias in the Hiring Process?

1. Start with role clarity

Knowing exactly what the role requires sets a strong foundation. Start by separating must-have requirements from nice-to-have ones. This prevents you from inadvertently screening on pattern recognition. For example, listing ten non-negotiable requirements often discourages strong candidates who may not check every box. Instead, identify the core competencies and outcomes, and label other skills as optional.

2. Use structured screening criteria

Before reviewing any application, you must establish consistent standards for all candidates. Create resume-review criteria that align with the role’s key skills, and a simple rating system with behavioral anchors. This way, every candidate is evaluated on the same metrics.

For example, make it clear to reviewers that recognizing a candidate’s name or alma mater should not affect how that candidate is rated.
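A rating system with behavioral anchors can be as simple as a shared lookup table plus a check that every resume is scored on the same criteria. The sketch below is one possible shape; the criteria, the anchor wording, and the `score_resume` helper are hypothetical placeholders, not a recommended rubric.

```python
# Hypothetical criteria and anchors; replace them with the role's real ones.
RUBRIC = {
    "data_analysis": {
        1: "No evidence of analysing data",
        3: "Analysed data for a defined project",
        5: "Led analysis that changed a business decision",
    },
    "stakeholder_communication": {
        1: "No evidence of presenting work",
        3: "Presented results to own team",
        5: "Presented results to senior or external audiences",
    },
}


def score_resume(ratings: dict[str, int]) -> float:
    """Return the mean rating, insisting on the same criteria for every resume."""
    missing = set(RUBRIC) - set(ratings)
    extra = set(ratings) - set(RUBRIC)
    if missing or extra:
        raise ValueError(f"missing={sorted(missing)} extra={sorted(extra)}")
    for criterion, value in ratings.items():
        if value not in range(1, 6):
            raise ValueError(f"{criterion}: rating must be 1-5, got {value}")
    return sum(ratings.values()) / len(ratings)


print(score_resume({"data_analysis": 3, "stakeholder_communication": 5}))  # 4.0
```

Rejecting extra criteria is deliberate here: it stops a reviewer from adding an ad hoc "went to a good school" score for one candidate only.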

3. Introduce blind screening when needed

Anonymous hiring can remove irrelevant cues. In the early stages, we suggest that you redact full names and demographic details from resumes and applications. This typically means hiding the candidate’s name, gender, ethnicity, and institution.

For instance, one implementation of blind hiring yielded more diverse finalist pools without extending time-to-hire. Researchers suggest blind screening helps reviewers rely on qualifications. The goal is balance: remove the primary bias triggers while preserving meaningful context.
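If applications are already stored as structured records, redaction can be a small transformation applied before reviewers see anything. The sketch below assumes a plain dictionary per application with hypothetical field names; free-text resumes would need more careful handling than this.

```python
# Fields hidden from first-round reviewers, following the list above.
# The field names themselves are assumptions about how applications are stored.
REDACTED_FIELDS = {"name", "gender", "ethnicity", "institution"}
PLACEHOLDER = "[REDACTED]"


def redact(application: dict) -> dict:
    """Return a copy with identity cues masked and everything else untouched."""
    return {
        field: (PLACEHOLDER if field in REDACTED_FIELDS else value)
        for field, value in application.items()
    }


application = {
    "candidate_id": "C-1042",
    "name": "Jordan Example",
    "institution": "Example University",
    "degree": "BSc Statistics",
    "skills": ["SQL", "forecasting"],
}
print(redact(application))
```

Keeping an opaque `candidate_id` lets the recruiting team re-link the record once the blind stage is over, while the degree and skills stay visible as meaningful context.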

4. Standardize interviews

Unstructured chats can amplify bias, while structured interviews mitigate it. Have each candidate answer the same set of questions in the same order within the allotted time to maintain fairness. Use behavioral or situational questions tied to the role requirements, so that candidates face an identical format and personal prejudices have less room to sway the outcome. Train interviewers by explaining that asking different questions invites inconsistency and bias.

5. Score the evidence

Use data to compare candidates rather than gut feelings. For each criterion, define what each score from 1 to 5 looks like, with specific examples and behaviors. Don’t accept vague labels like “excellent communicator” without proof. Instead, insist that interviewers cite concrete examples to support their ratings. Recording precise evidence prevents any single interviewer’s charm or confidence from getting in the way of objective evaluation.
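One way to enforce "no rating without evidence" is to make the scorecard itself refuse entries that lack it. The sketch below is a minimal version of that idea; the `Rating` class and the five-word minimum are hypothetical choices, and a word count is only a crude proxy for real evidence.

```python
from dataclasses import dataclass

MIN_EVIDENCE_WORDS = 5  # hypothetical threshold; tune it to your own process


@dataclass(frozen=True)
class Rating:
    criterion: str
    score: int      # 1 (weak) to 5 (strong)
    evidence: str   # what the candidate actually said or did

    def __post_init__(self):
        if not 1 <= self.score <= 5:
            raise ValueError("score must be between 1 and 5")
        if len(self.evidence.split()) < MIN_EVIDENCE_WORDS:
            raise ValueError("cite a concrete example, not a label")


ok = Rating(
    criterion="communication",
    score=4,
    evidence="Explained the outage timeline clearly to a non-technical panel",
)
print(ok.score)  # 4
```

With this in place, `Rating("communication", 5, "excellent communicator")` raises a `ValueError`, which nudges the interviewer to write down what they actually observed.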

6. Use multiple evaluators

Relying on a single person’s decision is risky, especially when the stakes are high. Involve a diverse panel of at least three interviewers to evaluate each candidate. Each one fills out their own scorecard, and when a variety of perspectives weigh in, individual biases tend to cancel each other out.

For example, a panel might consist of members of different genders, ages, or cultural backgrounds, so that no single perspective dominates. Once all have scored the candidate, aggregate the scores and then discuss the choices.
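Aggregating scorecards can be sketched in a few lines. The example below averages each criterion across the panel and flags criteria where interviewers disagree strongly, so the debrief can focus there. The function name, the data layout, and the disagreement threshold are assumptions made for illustration.

```python
from statistics import mean

MIN_PANEL_SIZE = 3     # the text above suggests at least three interviewers
DISCUSS_IF_RANGE = 2   # hypothetical: flag criteria where scores differ by 2 or more


def aggregate_panel(scorecards: dict[str, dict[str, int]]) -> dict[str, dict]:
    """Combine independent scorecards into a per-criterion summary."""
    if len(scorecards) < MIN_PANEL_SIZE:
        raise ValueError(f"need at least {MIN_PANEL_SIZE} scorecards")
    criteria = set.intersection(*(set(card) for card in scorecards.values()))
    if any(set(card) != criteria for card in scorecards.values()):
        raise ValueError("every interviewer must score the same criteria")
    summary = {}
    for criterion in sorted(criteria):
        scores = [card[criterion] for card in scorecards.values()]
        spread = max(scores) - min(scores)
        summary[criterion] = {
            "mean": round(mean(scores), 2),
            "range": spread,
            "discuss": spread >= DISCUSS_IF_RANGE,
        }
    return summary


panel = {
    "interviewer_a": {"problem_solving": 4, "communication": 2},
    "interviewer_b": {"problem_solving": 4, "communication": 4},
    "interviewer_c": {"problem_solving": 5, "communication": 5},
}
print(aggregate_panel(panel))
```

In this sample the panel broadly agrees on problem solving, while the three-point spread on communication is flagged as the item worth discussing.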

7. Delay the “gut feeling” until the end

Rely on data and strong evidence first, and only then see what your intuition says about it. Separate evaluation from deliberation by having each interviewer submit scores and notes independently. Then, review the compiled data before anyone jumps to conclusions. This helps prevent first impressions from dominating.

By evaluating the candidates on numbers first, you ensure that intuition stays secondary to the evidence. One approach is to randomize the interview order and have panelists rate each person individually. Only after the data is set can you debrief.

8. Separate potential from familiarity

Beware of labels like ‘high potential’ or ‘culture fit’ because they can be facades for a comfort bias. True potential shows up in objective evidence, not in how much a candidate resembles you. When an interviewer says a candidate reminds them of themselves, it’s often a red flag for bias.

Ask interviewers to justify a ‘high potential’ rating with examples. Has the candidate shown continuous growth? Did they advance despite barriers? If a team leader rates someone as promising mainly because they feel familiar, double-check that assessment against an objective one.

Common Objections to Reducing Bias

1. Structured hiring takes too long?

Redesigning the process requires effort, but the cost of rushing bad hires is far higher. Studies estimate that a bad hire can cost up to 30% of that employee’s first-year salary in recruitment, training, and lost productivity. Structured interviews, automated pre-screening tests, and clear scorecards help you avoid those long-term losses.

Standardized assessments and written notes help streamline the hiring funnel by quickly identifying the most qualified candidates. Additionally, decision-making becomes faster and more confident when all team members are aligned on the same criteria.

2. Do we need culture fit to maintain team harmony?

Value alignment is crucial, but insisting on finding a culture fit has its own risk of shrinking the team into a monoculture. When everyone has the same background and viewpoint, who is going to come up with new ideas? Who is going to bring a new perspective to the cause?

This is precisely why new hires should add to the culture rather than simply match it. Look for a culture add: someone who respects your core values but does not hesitate to bring unique ideas to the table. A mixed team that challenges assumptions is far more resilient and creative.

3. Isn’t our team already diverse enough?

Demographic diversity alone isn’t a cure. True diversity also includes cognitive diversity: varied experiences, problem-solving methods, and communication styles. A team can be diverse in demographics, yet still fall into a single way of thinking when solving problems.

If all product managers graduated from the same university, you might miss the next big thing. Therefore, implement the best DEI hiring practices and use bias reduction to reveal gaps that go beyond the visible ones.

How Data and Behavioral Insights Reduce Hiring Bias

1. Structure alone is not enough

Process changes are vital, but structure alone cannot guarantee fair outcomes, because human judgment can always creep back in. For instance, when one tech company expanded its candidate pool by requiring managers to interview more diverse applicants, the final hires remained overwhelmingly white. Interviewers let their own internal filters creep in unless they’re given better data.

2. Using behavioral data

This is where objective assessments and data insights really help. Tools that measure personality, work ethic, and core values can highlight qualities that aren’t easily seen on a resume. Standardized behavioral assessments also help quantify these qualities.

This might involve a short pre-hire questionnaire or simulation that objectively scores how a candidate might behave in certain situations. What matters is that the data you collect is relevant to the decision you use it for.

3. Predicting fit beyond resumes

This is where the true power lies. By combining multiple data sources, from cognitive tests to value alignment scores, you get a holistic view of each candidate. A resume may suggest that someone is a team player, but the combined data shows whether they truly are.

By calibrating all hires against the same data, you reduce reliance on a single person’s intuition. This approach not only helps you find the right hire but also creates a feedback loop that lets you keep improving the process. Blending human insights with behavioral science will help you and your team make consistent, data-backed hiring decisions and fight unnecessary bias.

How to Measure if Your Hiring Efforts are Working?

Implementing all the bias-reduction strategies is just the beginning of the process. You need to measure the impacts to understand what is working and what isn’t. Here’s how:

1. Demographics at each funnel stage

To measure progress, record the number of applicants, interviewees, and hires by demographic segment. A sudden drop-off in a particular group during resume screening can signal bias. Therefore, aim for consistent proportions throughout the hiring process.
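A funnel check like this comes down to simple arithmetic: compute each group’s pass rate between stages, then compare it with the best-performing group. The sketch below assumes three stage names and a 0.8 ratio threshold, borrowed from the commonly cited four-fifths rule of thumb; both are illustrative assumptions, and the group labels and counts are made up.

```python
STAGES = ["applied", "interviewed", "hired"]  # assumed stage names


def pass_rates(counts: dict[str, dict[str, int]]) -> dict[str, dict[str, float]]:
    """Pass rate per group for each stage-to-stage transition."""
    rates = {}
    for group, by_stage in counts.items():
        rates[group] = {
            f"{start}->{end}": by_stage[end] / by_stage[start]
            for start, end in zip(STAGES, STAGES[1:])
            if by_stage[start] > 0
        }
    return rates


def flag_dropoffs(rates: dict[str, dict[str, float]], threshold: float = 0.8):
    """List (group, transition) pairs whose rate is below threshold x the best rate."""
    flags = []
    transitions = {t for group_rates in rates.values() for t in group_rates}
    for transition in sorted(transitions):
        best = max(r[transition] for r in rates.values() if transition in r)
        if best == 0:
            continue
        for group, group_rates in rates.items():
            if transition in group_rates and group_rates[transition] / best < threshold:
                flags.append((group, transition))
    return sorted(flags)


counts = {
    "group_a": {"applied": 100, "interviewed": 40, "hired": 10},
    "group_b": {"applied": 100, "interviewed": 20, "hired": 5},
}
print(flag_dropoffs(pass_rates(counts)))
# [('group_b', 'applied->interviewed')]
```

In this made-up data both groups convert interviews to hires at the same rate, so the gap sits at resume screening. A flag is a prompt to investigate, not proof of bias, especially with small samples.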

2. Time-to-hire by group

If one demographic systematically takes longer to process, investigate the reason. The cause could be anything from slower callbacks to delays in scheduling second rounds.
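Comparing time-to-hire across groups needs only application and decision dates. The sketch below uses the median, which is less sensitive to a single unusually slow case than the mean; the record layout and group labels are hypothetical.

```python
from collections import defaultdict
from datetime import date
from statistics import median


def median_days_by_group(records: list[tuple[str, date, date]]) -> dict[str, float]:
    """Median days from application to decision, per group."""
    days = defaultdict(list)
    for group, applied_on, decided_on in records:
        days[group].append((decided_on - applied_on).days)
    return {group: median(values) for group, values in days.items()}


records = [
    ("group_a", date(2024, 1, 1), date(2024, 1, 21)),
    ("group_a", date(2024, 1, 5), date(2024, 2, 4)),
    ("group_b", date(2024, 1, 1), date(2024, 2, 15)),
]
print(median_days_by_group(records))
# {'group_a': 25.0, 'group_b': 45}
```

A gap like the one in this sample would be worth tracing stage by stage to see where the extra days accumulate.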

3. Offer acceptance rates

If your majority-culture candidates accept offers at a much higher rate than others, it suggests your process might feel less welcoming to some groups. Keep an eye on the panel’s decisions, as they can be unintentionally skewed.

4. Performance and retention

Progress shows up in comparison, so compare hiring success rates between the new and old processes. Use metrics such as rehireability, where managers say whether they would hire the same person again. Continually assess 90-day and one-year retention of new hires to understand their performance, their growth, and the impact of your changes. If you see diverse hires leaving before the end of those periods, it’s a red flag.
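Retention is easy to miscount if recent hires, who have not yet had time to reach 90 days, are included. The sketch below only counts hires who started at least `days` before the reporting date; the function name and record layout are assumptions for illustration.

```python
from datetime import date, timedelta
from typing import Optional


def retention_rate(
    hires: list[tuple[date, Optional[date]]],
    as_of: date,
    days: int = 90,
) -> Optional[float]:
    """Share of eligible hires still employed `days` after their start date.

    Each hire is (start_date, end_date), with end_date None if still employed.
    Hires who started fewer than `days` before `as_of` are excluded.
    """
    window = timedelta(days=days)
    eligible = [(start, end) for start, end in hires if start + window <= as_of]
    if not eligible:
        return None
    retained = sum(1 for start, end in eligible if end is None or end - start >= window)
    return retained / len(eligible)


new_process_hires = [
    (date(2024, 1, 1), None),               # still employed
    (date(2024, 2, 1), date(2024, 3, 1)),   # left after 29 days
    (date(2024, 12, 15), None),             # too recent to count
]
print(retention_rate(new_process_hires, as_of=date(2024, 12, 31)))  # 0.5
```

Running the same function over hires from the old process, and separately per demographic group, gives the comparison described above.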

How Revaluate180 Helps Reduce Bias in Hiring

At Revaluate180, we evaluate candidates on value alignment and potential rather than on how similar their backgrounds are. Using our proprietary psychology model and advanced analytics, we measure a person’s values and motivators to find the right fit for the role.

By focusing on underlying behavioral traits, our approach helps surface talent with diverse experiences. Our data-driven assessments apply consistent criteria to all applicants, enabling fairer, more objective hiring decisions.

By grounding discussions in data, hiring teams can focus on fit, potential, and performance rather than assumptions. This leads to more objective decisions, stronger hiring outcomes, and improved long-term retention.

Contact Revaluate180 to build a more inclusive, data-driven hiring strategy.

Fair Hiring is Better Hiring

Reducing bias isn’t a one-and-done fix, but a strategic growth investment. The best part is that a fair process helps everyone at work. Candidates get an experience that is consistent with your ethics, and your company is rewarded with the best talent in the industry. In the end, the goal is great hires from strong, diverse backgrounds, along with higher engagement and productivity.

You can start right away by picking two or three of the strategies above and implementing them first. Start small and compound from there, because fair hiring isn’t just about compliance; it leads to stronger teams, more innovation, and ultimately better profits.

Unlock AI-Powered Hiring Analytics

Transform the way you hire with insights that create aligned, collaborative, and high-performing teams.

Smarter Hiring Decisions

Reduce Expensive Turnover

AI-Driven Insights

Optimize Team Performance

FAQs

1. What is the most common bias in hiring?

Affinity bias, also called similarity bias, is the most widespread of them all. It’s the tendency to favor candidates whose backgrounds resemble your own.

2. How can bias be reduced in the hiring process?

You can do this by using a structured process that is fair at every stage. It means clear role criteria in job descriptions, standardized resume rubrics, blind screening, structured interviews, and a panel of leaders with diverse opinions.

3. What are the three ways to reduce bias?

You can start by implementing structured interviews and scorecards. Then use blind resume screening to hide identity cues, and finally involve multiple evaluators or a panel that prioritizes diversity while making the final decision.

4. What can be done to reduce selection bias?

Ensure your selection criteria are strictly tied to your precise job requirements. Through blind resume screening, you can eliminate the non-job factors.

5. Can structured interviews really reduce bias?

Absolutely. Studies suggest that asking the same questions and using standardized scoring yields far more reliable evaluations and roughly twice the predictive power of casual interviews.

6. Does AI remove bias from hiring?

Not on its own. AI and ATS tools can reduce some human bias, but they’re only as good as the data they’re trained on. The best approach is to use AI as a tool, not as the sole decision-maker.

7. How long does it take to see results from bias reduction efforts?

You can see the change in each hiring cycle. You’ll notice improved diversity in the applicant pool, and metrics like time-to-hire or diversity ratios may stabilize over 1-2 hiring cycles. If you’re really committed to the cause, you’ll notice a significant paradigm shift in 6-12 months of consistent practice.

8. Do we need to train our entire hiring team?

Ideally, yes. Everyone who contributes to hiring should receive at least bias-awareness training and learn the new process.